For hundreds of years, dead bodies (cadavers) have taught medical students about human anatomy. In cadaver labs, students dissect, touch, rotate, and explore organs in hands-on experiences that make knowledge stick for a lifetime.

Unfortunately, these experiences are out of reach for most of us. Cadaver labs are expensive to run and cadavers are in limited supply, so non-medical students have to settle for secondary learning experiences like iPad apps and websites. These experiences are good, but not nearly as effective as the hands-on learning experiences students get in the lab.

That’s why we created CadaVR, a “living” virtual reality cadaver lab that emulates a real cadaver lab, minus the crowd (4-8 students per cadaver), the unforgiving smell, and the high cost. Not only does CadaVR let students use their hands and other senses to learn about anatomy, but it also offers things that are not available in physical labs, such as a simulation of how the heart beats. (If you’re a medical student and you detect a heartbeat in your cadaver, you should probably run!)

At this stage of the project, we’ve designed CadaVR to teach students very simple facts about the heart through its task-driven interface. We’re also developing a lesson-building platform that gives content curators the ability to easily create lessons from within virtual reality using their hands and voice. In this post, we’ll take a look at some of the capabilities that we’re building, and our long-term vision for VR education.

CadaVR is built on the web, so you can access it anywhere, anytime. Get the project on the Leap Motion Developer Gallery!

Interaction Design

![cadavr-2]()

Grabbing. You can reach out into the virtual world and grab objects with your hands. This lets you handle anatomy much as you would in a physical cadaver lab, which teaches you how large structures are, as well as where they are located in the body.

![cadavr-6]()

Scaling. While grabbing an object with one hand, you can use your other hand to make the object bigger or smaller. This makes it easier to learn about small anatomical structures, such as veins, arteries and small muscles. It’s also just fun to play around with. Have you ever seen a heart the size of your face?

The grabbing and scaling logic has been modularized into two separate scripts, one for each interaction, so they can be reused. You can find them on GitHub here and here, along with several examples. Keep in mind that all code is currently in the prototype stage!
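To make the scaling idea concrete, here is a minimal sketch of one way a two-handed scale factor could be computed. It is not taken from the CadaVR scripts: the `Vec3` type, the `scaleFactor` function, and the clamp limits are all hypothetical, and hand positions are assumed to arrive as plain coordinate triples from whatever hand-tracking layer is in use.

```typescript
// Hypothetical 3D point type; the real scripts may represent positions differently.
type Vec3 = [number, number, number];

// Straight-line distance between two points.
function distance(a: Vec3, b: Vec3): number {
  const dx = a[0] - b[0];
  const dy = a[1] - b[1];
  const dz = a[2] - b[2];
  return Math.sqrt(dx * dx + dy * dy + dz * dz);
}

// Scale factor relative to the moment the second hand joined the gesture.
// Moving the hands apart enlarges the object; moving them together shrinks it.
// The result is clamped (assumed limits) so the object cannot vanish or fill the scene.
function scaleFactor(
  startLeft: Vec3,
  startRight: Vec3,
  left: Vec3,
  right: Vec3,
  minScale: number = 0.25,
  maxScale: number = 8
): number {
  const startDist = distance(startLeft, startRight);
  if (startDist === 0) {
    return 1; // hands started at the same point: leave the scale unchanged
  }
  const raw = distance(left, right) / startDist;
  return Math.min(maxScale, Math.max(minScale, raw));
}
```

Multiplying the object's size at the start of the gesture by this factor on every frame, rather than accumulating small changes, keeps the result stable when tracking data is noisy.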

![cadavr-5]()

Tasks. Students in the cadaver lab generally learn by completing specific tasks, so we replicated that experience in CadaVR. When a task is completed, CadaVR shows the title of the next task along with a detailed description. The idea here is for professors to provide detailed descriptions, including images and video, of tasks students need to perform.
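As a rough illustration of the task flow described above, the sketch below models a lesson as an ordered list of tasks and hands back the next one when the current task is completed. The `Task` shape and the `TaskList` class are hypothetical names chosen for this example; they do not describe how CadaVR stores its lessons.

```typescript
// Hypothetical task shape: a title plus the detailed description a professor would write.
interface Task {
  title: string;
  description: string;
}

// Steps through tasks in order, one at a time.
class TaskList {
  private index = 0;
  private readonly tasks: Task[];

  constructor(tasks: Task[]) {
    this.tasks = tasks;
  }

  // The task the student is working on, or null once the lesson is finished.
  current(): Task | null {
    return this.tasks[this.index] ?? null;
  }

  // Marks the current task as done and returns the next one to display (or null).
  complete(): Task | null {
    if (this.index < this.tasks.length) {
      this.index += 1;
    }
    return this.current();
  }
}
```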

Today, CadaVR contains a rough prototype of the task-driven environment. We plan to expand this feature to support tasks like simple organ dissections and comprehensive pin tests. We can’t wait to do more user testing with the task mechanism, so if you have a chance to try it out and want to send us feedback, please do!

User Testing and Lessons Learned

![cadavr-3]()

We believe that VR applications have the most potential when they use multiple input methods. While this opens up a smoother user experience, it also involves a lot of testing and iteration.

We initially built CadaVR around gestures. For example, we used line-of-sight and length-of-gaze to simulate clicking things that were out of reach, and supported a rotation gesture to simulate rotating. We quickly learned that minimizing the amount of energy required for each action was extremely important. For instance, if an object is placed too high, gazing to click could strain the neck. Also, gestures that require several repetitions, such as our initial rotation gesture that rotated the heart too slowly, can quickly tire out users.
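The gaze-to-click idea can be sketched as a small dwell timer: if the gaze rests on the same object long enough, that counts as a click. This is an assumption-laden illustration rather than the original implementation. The `GazeDwell` class, the string target ids, and the 1500 ms default are hypothetical, and working out which object the user is looking at is left to the rendering layer.

```typescript
// Fires a "click" once the gaze has rested on one target for the dwell time.
class GazeDwell {
  private target: string | null = null; // id of the object currently under the gaze
  private since = 0;                    // timestamp (ms) when the gaze landed on it
  private fired = false;                // true once this dwell has already clicked
  private readonly dwellMs: number;

  constructor(dwellMs: number = 1500) {
    this.dwellMs = dwellMs;
  }

  // Call once per frame with the gazed object's id (or null) and a timestamp in ms.
  // Returns the target id on the frame the dwell completes, otherwise null.
  update(gazed: string | null, nowMs: number): string | null {
    if (gazed !== this.target) {
      // Gaze moved to a different object (or to nothing): restart the timer.
      this.target = gazed;
      this.since = nowMs;
      this.fired = false;
      return null;
    }
    if (gazed !== null && !this.fired && nowMs - this.since >= this.dwellMs) {
      this.fired = true; // click only once per continuous gaze
      return gazed;
    }
    return null;
  }
}
```

A shorter dwell time costs the user less effort but makes accidental clicks more likely, so the value is worth tuning in user tests.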

“We quickly learned that minimizing the amount of energy required for each action was extremely important.”

On the other hand, some degree of precision is important, so rotating too fast could lead to a suboptimal experience. We learned from this and prototyped grabbing objects, which inherently supports precise rotation and seems to feel much more natural for our users.

We also found that the distance of virtual objects is extremely important. A heart positioned 1.5 meters away may be within my reach, but will be out of reach of my younger nephew. This means we need to dynamically position things based on each user’s attributes. Fortunately, Leap Motion provides this information, which makes writing the code a lot easier.
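One simple way to position objects per user is to place them at a fixed fraction of that user's reach. The sketch below assumes the arm length is already available as a number in meters; how it is obtained from the tracking data is not shown, and the `comfortableDistance` name and the 0.7 default fraction are hypothetical choices for illustration.

```typescript
// Returns how far in front of the user (in meters) to place an object,
// as a fraction of the user's arm length so it stays within easy reach.
function comfortableDistance(armLengthM: number, reachFraction: number = 0.7): number {
  if (!(armLengthM > 0)) {
    throw new RangeError("armLengthM must be a positive number");
  }
  // Keep the fraction in a sensible range: never at the body, never beyond full reach.
  const fraction = Math.min(1, Math.max(0.1, reachFraction));
  return armLengthM * fraction;
}
```

With this approach, an adult and a child each see the heart at a distance matched to their own reach instead of at one fixed distance for everyone.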

Future

![cadavr-4]()

As we continue to expand CadaVR, one of the most important features is our lesson builder. We like to say that it’s analogous to creating a web page in WordPress or Squarespace, except we believe natural input (i.e. hands and voice) will make creating content much easier and faster. We’re building this lesson builder because we believe a platform that gives anyone the ability to create effective learning environments will improve education around the world.

New environments. We believe the environment that students work in greatly impacts how they study. The wonderful thing about virtual reality is that we can create CadaVR outside of the cadaver lab. We don’t need to simulate a room filled with dead bodies; rather, we can put a cadaver in space, in a calming grassy field, or even in a library. And given that each person is different, we want to allow each person to choose the environment that suits them best.

Physiology. Imagine grabbing a beating heart, holding it in your hand, rotating it, enlarging it, and stepping inside to learn how the valves open and close and how the ventricles push blood out to the other organs. Imagine a similar experience in the lungs, the stomach, and blood cells. We plan to give content creators physiological tools so they can simulate normal and pathological functions and create compelling experiences that are not only cool, but also give students a new perspective on the functions of the human body.

Haptic feedback. This is the primary advantage a physical cadaver lab has over a virtual lab. Once we can simulate haptics, we can simulate what tissues feel like, both normal and pathological, as well as simulate what physiology feels like. Suitable haptics aren’t ready today, but we’ll be first in line when they are.

“We plan to give content creators physiological tools so they can simulate normal and pathological functions.”

Our long-term vision is to create a platform that gives anyone the ability to create lessons about anything. Math, science, history, architecture, construction, environmental planning – you name it. We plan to do this by creating a set of APIs that give web developers access to upload custom objects, whether it be a simple bouncing ball to help teach gravity, or a crane to help teach construction.

Lesson curators will then use these custom objects to create compelling physics lessons, immersive construction training simulations, learning environments that teach what’s difficult to learn from two-dimensional surfaces, learning environments that are not feasible to create in the physical world, and everything in between.

We want to improve education around the world, and we know we can’t do it alone. We’re extremely excited about Leap Motion and VR, and their potential impact on how people teach and learn around the globe. If you would like to learn more about CadaVR, stay updated, and/or help us take the next step, please check out our website and GitHub repo.

Team CadaVR

Ahmad Aljadaan is a PhD student studying Biomedical and Health Informatics at the University of Washington, and a software and usability engineer with 4 years of experience at Stanford University. His research focuses on predictive analytics, building visualization tools that help physicians make predictions about patients at risk of readmission.

Mark Laughery is a Masters student in Human-Centered Design & Engineering with experience at six tech startups in Seattle. His background is in business analysis and product management.

Ryan James is a PhD student studying Biomedical and Health Informatics at the University of Washington, a software developer with 4 years of industry experience at Microsoft, and an entrepreneur with one year of experience running a small company in 2010. His research focuses primarily on understanding how medical professionals interact, learn and collaborate in virtual reality.

The post Welcome to CadaVR: A Living Cadaver Laboratory on the Web appeared first on Leap Motion Blog.

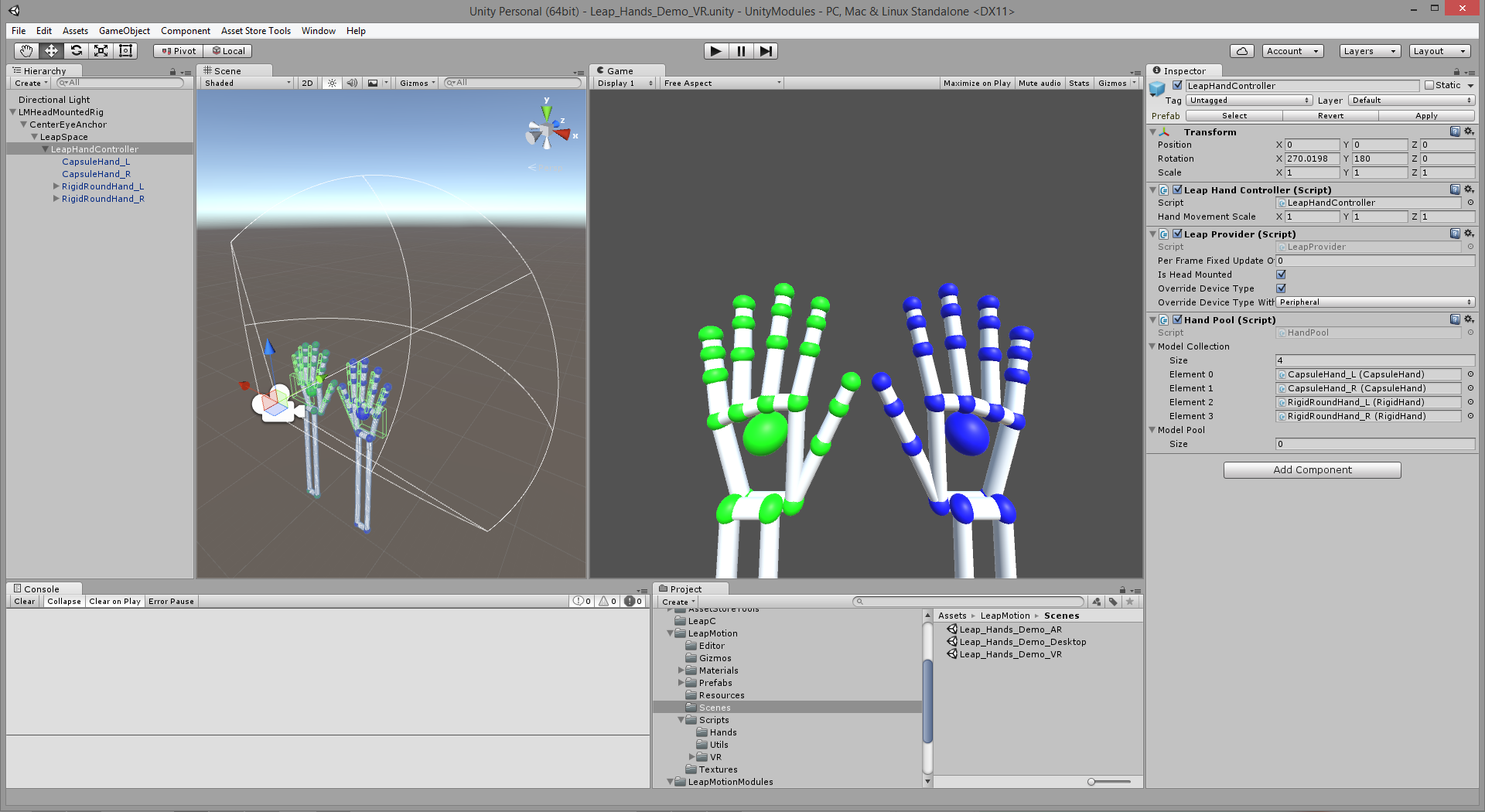

This popular community plugin is now the official plugin and brings new features like rigged character hands, Image Hands, passthrough, and built-in support for the Oculus Rift. Visit

This popular community plugin is now the official plugin and brings new features like rigged character hands, Image Hands, passthrough, and built-in support for the Oculus Rift. Visit