Leap Motion’s new Orion software represents a radical shift in our controller’s ability to see your hands. In tandem, we’ve also been giving our Unity toolset an overhaul from the ground up. The Core Asset Orion documentation has details on using the tools and the underlying API, but to help you get acquainted, here’s some background and higher-level context for how the package works and where it’s headed.

VR involves some intense performance demands. To meet these demands, we’ve been looking at every step of our pipeline. We started with a brand new LeapC client architecture for streamlined data throughput from the Leap service into Unity. This takes advantage of a new, closer-to-the-metal API that’s built in C. Then we significantly refactored the final step of our pipeline, our Unity hand control classes. This was driven by what we’ve learned from several years of developing with Leap Motion hands and an eye towards both performance and workflow.

The new Core Asset is a minimalist subset of previous releases – you could probably call it the “core” Core Asset. We’ve also started down our new roadmap of add-on modules, starting with the new Pinch Utilities Module. These modules will both upgrade our existing tools and expand them with new features. In the weeks to come, we’ll be releasing a package of new and updated hand models and scripts, an update to our Leap Motion VR Widgets, and other modules that will make your toolbox more powerful than ever.

New Workflow for Hands in Unity

Performance optimization was the key driver as we started rebuilding our client-side pipeline with a new LeapC implementation of our API. And since we were rearchitecting the set of C# classes that drive our hands to work with our shiny new LeapC API bindings, we also had the chance to improve some of our Unity workflow.

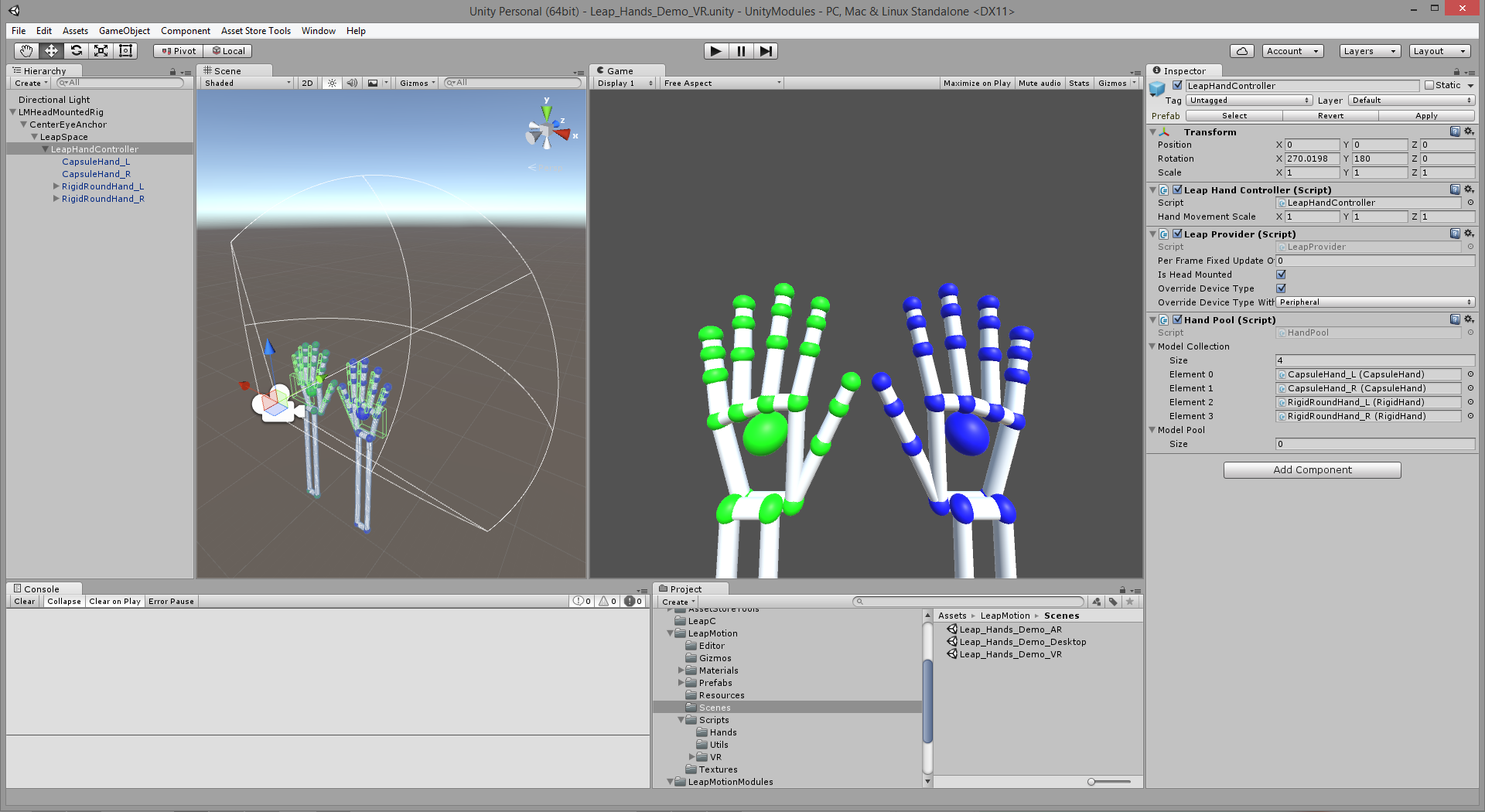

One key workflow improvement we’ve created is what we call “persistent hands.” This means that you can now see Leap Motion hand models – 3D representations with Leap IHandModel scripts attached – in the Unity Editor hierarchy window and in the scene view at editor time. We’ve done this by having a few methods in the IHandModel that execute at Editor update time and not at runtime. There are now default poses for Leap Motion hands that are sent to the hand models to initialize and pose them in the scene view.

This is huge, because now you can visualize your 3D hands compared to the rest of the objects in your scene without having to play, place your hands in front of the controller, and then pause. Pressing my mouse with my nose or elbow while holding my hands up many times over the last year drove home the value of this feature. Combined with the LeapHandController’s frustum gizmo (visible in the Scene view if you have the LeapHandController GameObject selected), this is a helpful way to gauge where and how far your hands will reach in your scene.

Another workflow enhancement is that by having our geometry + script hand assemblies as instances in our Unity scenes – instead of instantiating at runtime – it’s now easier to drive hands that may be attached to larger hierarchies. This makes it easier to experiment with driving avatars and characters.

To support this, we’ve also added an abstract class called HandTransitionBehavior.cs, which is attached to a hand model and called when the model receives or loses Leap Motion hand data. For this beta, we’ve implemented the simplest version of this possible with HandEnableDisable.cs which – wait for it – enables and disables the hand model. More importantly, developers can now easily implement their own behaviors, say fading or dropping, to trigger when a hand model transitions between tracking and not tracking.
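To make the idea of a custom transition concrete, here is a minimal sketch in plain C# with no Unity dependency. Every name in it (`SketchHandModel`, `SketchTransitionBehavior`, `OnTrackingGained`, `OnTrackingLost`, `Tick`) is a hypothetical stand-in chosen for illustration, not the actual member list of HandTransitionBehavior.cs or HandEnableDisable.cs; check the Core Asset source for the real signatures.

```csharp
using System;

// Hypothetical stand-in for a hand model; NOT the Core Asset's IHandModel.
public class SketchHandModel
{
    public bool Visible;
    public float Opacity;
}

// Assumed shape of a transition behavior: one callback when tracking
// data arrives for the model, one when it goes away.
public abstract class SketchTransitionBehavior
{
    protected readonly SketchHandModel Model;

    protected SketchTransitionBehavior(SketchHandModel model)
    {
        Model = model;
    }

    public abstract void OnTrackingGained();
    public abstract void OnTrackingLost();
}

// Simplest possible behavior: show the model while tracked, hide it otherwise.
public sealed class EnableDisableTransition : SketchTransitionBehavior
{
    public EnableDisableTransition(SketchHandModel model) : base(model) { }

    public override void OnTrackingGained() { Model.Visible = true; }
    public override void OnTrackingLost() { Model.Visible = false; }
}

// A custom behavior: fade in and out instead of popping.
public sealed class FadeTransition : SketchTransitionBehavior
{
    private readonly float fadeSeconds;
    private bool tracked;

    public FadeTransition(SketchHandModel model, float fadeSeconds) : base(model)
    {
        if (fadeSeconds <= 0f)
            throw new ArgumentOutOfRangeException("fadeSeconds");
        this.fadeSeconds = fadeSeconds;
        Model.Visible = false;
        Model.Opacity = 0f;
    }

    public override void OnTrackingGained()
    {
        tracked = true;
        Model.Visible = true; // visible immediately, opacity ramps up in Tick
    }

    public override void OnTrackingLost()
    {
        tracked = false; // stay visible until the fade-out finishes
    }

    // Call once per frame with the elapsed time in seconds.
    public void Tick(float deltaSeconds)
    {
        float step = deltaSeconds / fadeSeconds;
        Model.Opacity = tracked
            ? Math.Min(1f, Model.Opacity + step)
            : Math.Max(0f, Model.Opacity - step);
        if (!tracked && Model.Opacity <= 0f)
            Model.Visible = false;
    }
}
```

In a real Unity project the `Tick` step would most likely live in a MonoBehaviour's `Update` and drive a material's alpha, but that wiring depends on your hand model's renderer setup.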

Additionally, we’ve created an abstract class for our Leap hand models, called IHandModel.cs. This new class gives developers more freedom in how they build Leap Motion hand models. So if you want to make hands out of flame particles, metaballs, or whatever inspired hands you dream up, you can wrap them in an IHandModel to drive them with Hand data. And as you build them, you’ll be able to see how Leap Motion data will affect them directly in the Editor. We’re just beginning to use this new functionality ourselves as we build our next generation of hand models.
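As a rough illustration of wrapping custom visuals in an abstract hand model, the sketch below uses plain C# so it runs outside Unity. The type and member names (`SketchHandData`, `SketchHandModelBase`, `SetHand`, `UpdateVisuals`, `ResetToDefaultPose`) and the default pose values are assumptions made up for this example; they are not the real IHandModel members. The point is only the shape: the base class holds the current hand data, falling back to a default pose when nothing is tracked, and each subclass decides how to render it.

```csharp
using System;

// Hypothetical, heavily reduced hand data; the real Leap Hand carries far more.
public struct SketchHandData
{
    public float PalmX, PalmY, PalmZ;
    public float PinchStrength; // 0 = open, 1 = fully pinched
}

// Assumed shape of an abstract hand model: it stores the hand data it is
// given and lets subclasses turn that data into visuals.
public abstract class SketchHandModelBase
{
    // Stand-in for the default pose used to show hands at editor time.
    public static readonly SketchHandData DefaultPose = new SketchHandData
    {
        PalmX = 0f, PalmY = 0.2f, PalmZ = 0f, PinchStrength = 0f
    };

    protected SketchHandData Hand = DefaultPose;

    public void SetHand(SketchHandData hand) { Hand = hand; }
    public void ResetToDefaultPose() { Hand = DefaultPose; }

    // Subclasses map the current hand data onto whatever they render.
    public abstract void UpdateVisuals();
}

// Example subclass: a "flame" hand that moves an emitter with the palm
// and burns brighter as the hand pinches.
public sealed class ParticleHand : SketchHandModelBase
{
    public float EmitterX, EmitterY, EmitterZ;
    public float EmissionRate;

    public override void UpdateVisuals()
    {
        EmitterX = Hand.PalmX;
        EmitterY = Hand.PalmY;
        EmitterZ = Hand.PalmZ;
        EmissionRate = 10f + 90f * Hand.PinchStrength;
    }
}
```

Because the model starts in the default pose, calling `UpdateVisuals` before any tracking data arrives still produces something to look at, which mirrors the persistent hands idea described earlier.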

How the New Unity Architecture Works

With this new workflow and the optimizations to our class architecture, we now have a different structure to our Prefabs and their MonoBehaviour components. Additionally, Unity’s new VR support and the Oculus 0.8.0 runtime allow us to have simpler camera rig prefabs.

As in previous releases, LeapHandControllers are attached to these camera rig prefabs. Then, instead of instantiating hand prefabs from the Unity project’s asset collection at runtime, hand models need to be present in the hierarchy as instances. This means dragging in hand prefabs first and then dragging those instances to their respective slot on the new HandPool component, which sits alongside the new LeapHandController component.

Until now, our Core Asset package has been anchored by the HandController class, which over time had grown to serve multiple roles. One of our first tasks in rebuilding the Core Asset was to split the roles of the old HandController into several simpler, smaller C# classes. For this, we took inspiration from the Factory pattern, which provides a nice metaphor for understanding how our Unity-side system works. In the Factory pattern, there’s an Assembler that uses a Factory to make Products.

The new, greatly simplified LeapHandController.cs acts as our Assembler. It uses our new HandPool.cs as the Factory. The HandPool’s Products are HandRepresentations, which are combinations of a 3D hand model and script assembly paired with the Leap Hand data to drive them.

You can watch this in action if you run either of the Core Asset example scenes (/Assets/LeapMotion/Scene/) with the LeapHandController GameObject selected. If you watch the HandPool component, you’ll see all the hand models from the scene added to the Model Pool at start. Then, when a person’s hand begins being tracked, LeapHandController asks the HandPool for a new graphics HandRepresentation and a physics HandRepresentation. You’ll see those models removed from the Model Pool. And when the person’s hand leaves tracking, you’ll see both of those models added back to the pool, ready for the next Leap Hand assignment.
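The pool lifecycle described above can be sketched in a few dozen lines of plain C#. This is an illustration of the Assembler/Factory/Product roles only, not the real LeapHandController.cs or HandPool.cs: all type and method names here (`SketchHandPool`, `MakeRepresentation`, `Return`, `UpdateTrackedHands`, and so on) are hypothetical, and the sketch ignores details such as left versus right hands.

```csharp
using System;
using System.Collections.Generic;

public enum ModelType { Graphics, Physics }

// Hypothetical stand-in for a hand model instance sitting in the scene.
public class PooledHandModel
{
    public string Name;
    public ModelType Type;
}

// Product: a model paired with the id of the tracked hand that drives it.
public class SketchHandRepresentation
{
    public int HandId;
    public PooledHandModel Model;
}

// Factory: hands out models from the pool and takes them back.
public class SketchHandPool
{
    private readonly List<PooledHandModel> modelPool = new List<PooledHandModel>();

    public int Available { get { return modelPool.Count; } }

    public void Add(PooledHandModel model) { modelPool.Add(model); }

    // Returns null when no free model of the requested type exists.
    public SketchHandRepresentation MakeRepresentation(int handId, ModelType type)
    {
        for (int i = 0; i < modelPool.Count; i++)
        {
            if (modelPool[i].Type != type) continue;
            PooledHandModel model = modelPool[i];
            modelPool.RemoveAt(i); // the model leaves the pool while in use
            return new SketchHandRepresentation { HandId = handId, Model = model };
        }
        return null;
    }

    public void Return(SketchHandRepresentation rep) { modelPool.Add(rep.Model); }
}

// Assembler: reacts to hands entering and leaving tracking.
public class SketchHandController
{
    private readonly SketchHandPool pool;
    private readonly Dictionary<int, List<SketchHandRepresentation>> active =
        new Dictionary<int, List<SketchHandRepresentation>>();

    public SketchHandController(SketchHandPool pool) { this.pool = pool; }

    // Call once per frame with the ids of all currently tracked hands.
    public void UpdateTrackedHands(IEnumerable<int> trackedHandIds)
    {
        var tracked = new HashSet<int>(trackedHandIds);

        // New hands: request one graphics and one physics representation.
        foreach (int id in tracked)
        {
            if (active.ContainsKey(id)) continue;
            var reps = new List<SketchHandRepresentation>();
            foreach (ModelType type in new[] { ModelType.Graphics, ModelType.Physics })
            {
                SketchHandRepresentation rep = pool.MakeRepresentation(id, type);
                if (rep != null) reps.Add(rep);
            }
            active[id] = reps;
        }

        // Lost hands: give their models back to the pool.
        var lost = new List<int>();
        foreach (int id in active.Keys)
            if (!tracked.Contains(id)) lost.Add(id);
        foreach (int id in lost)
        {
            foreach (SketchHandRepresentation rep in active[id]) pool.Return(rep);
            active.Remove(id);
        }
    }
}
```

With one graphics model and one physics model in the pool, calling `UpdateTrackedHands(new[] { 7 })` empties the pool, and a later call with an empty list puts both models back, which is the same add, remove, and re-add behavior you can watch in the Inspector.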

To flesh out this part of the system, the new LeapProvider class, attached to the same GameObject as the LeapHandController, deals with getting all the hand position data from the Leap Service. Again, this is an example of moving a task out of our LeapHandController so that we end up with a collection of simpler components rather than fewer, more complex, harder-to-understand scripts.

As always, we’re looking forward to your feedback and to the next batch of Leap Motion VR projects. Stay tuned for more updates as we build new add-on modules in the weeks to come.

The post Redesigning Our Unity Core Assets: New Workflow and Architecture for Orion appeared first on Leap Motion Blog.