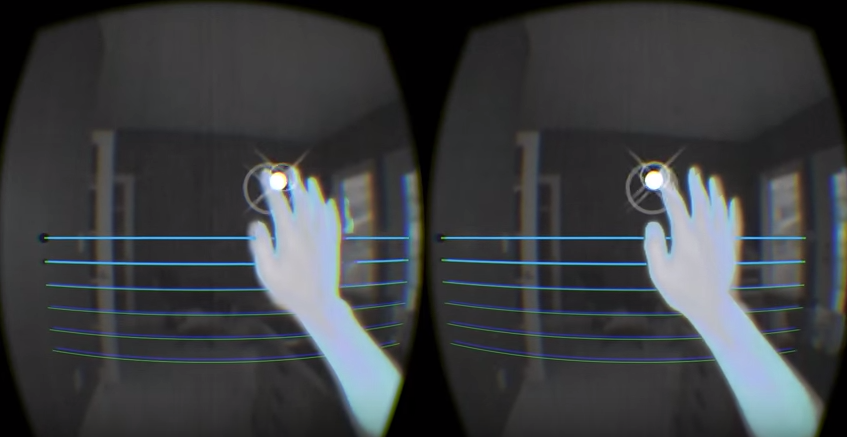

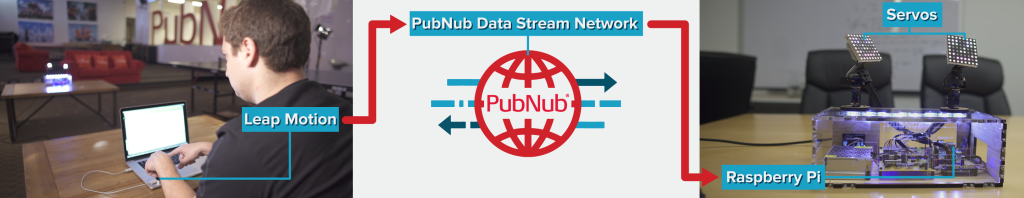

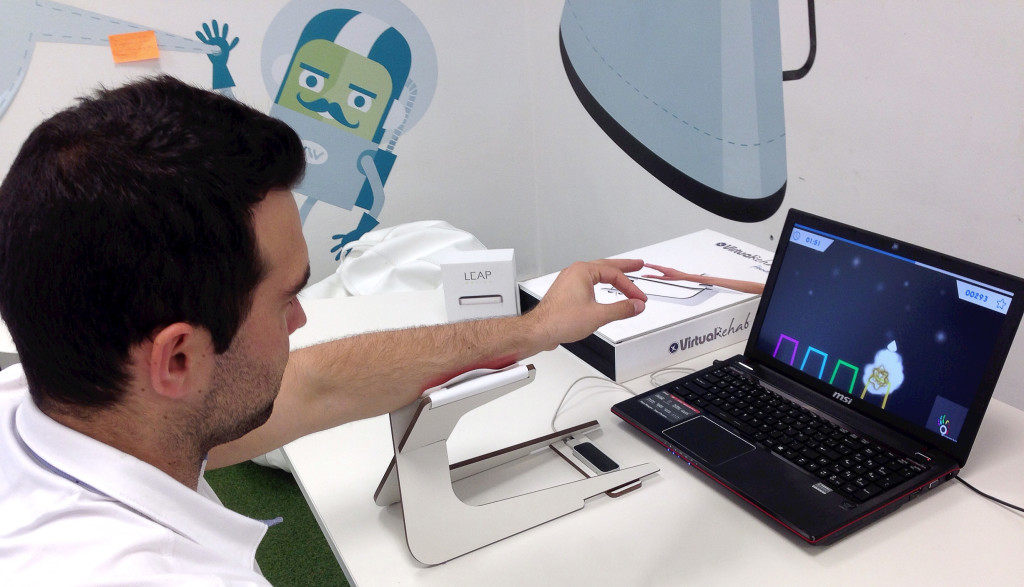

For hardware hackers, boards like Arduino and Raspberry Pi are the essential building blocks that let them mix and mash things together. While these boards don’t have the processing power to run our core tracking software, there are many ways to bridge hand tracking input on your computer with the Internet of Things. You just need to interface the computer running the Leap Motion software with your favorite dev board!

In this post, we’ll look at a couple of platforms that can get you started right away, along with some other open source examples. This is by no means an exhaustive list – Arduino’s website features hundreds of connective possibilities, from different communication protocols to software integrations. Whether you connect your board directly to your computer or send signals over Wi-Fi, there’s always a way to hack it.

Platform Integrations

Wireless Control with Cylon.js

For wireless-enabled controllers, it’s hard to beat the speed and simplicity of a Node.js setup. Cylon.js takes it a step further with integrations for (deep breath) Arduino, BeagleBone, Intel Galileo and Edison, Raspberry Pi, Spark, and Tessel. But that’s just the tip of the iceberg, as there’s also support for a range of general-purpose input/output (GPIO) devices (motors, relays, servos, Makey buttons) and Inter-Integrated Circuit (I2C) devices.

On our Developer Gallery, you can find a Leap Motion + Arduino example that lets you turn LEDs on and off with just a few lines of code. Imagine what you could build:

    "use strict";

    var Cylon = require("cylon");

    Cylon.robot({
      // One connection per piece of hardware
      connections: {
        leap: { adaptor: "leapmotion" },
        arduino: { adaptor: "firmata", port: "/dev/ttyACM0" }
      },

      devices: {
        // Declared so that my.leapmotion is defined inside work() below
        leapmotion: { driver: "leapmotion", connection: "leap" },
        led: { driver: "led", pin: 13, connection: "arduino" }
      },

      work: function(my) {
        // Light the LED whenever at least one hand is in view
        my.leapmotion.on("frame", function(frame) {
          if (frame.hands.length > 0) {
            my.led.turnOn();
          } else {
            my.led.turnOff();
          }
        });
      }
    }).start();
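The example above switches the LED fully on or off. As a rough sketch of where you might take it next, the helper below maps palm height to an 8-bit brightness value. The function names and the 100–400 mm hover range are assumptions made for illustration, not values from the Leap Motion or Cylon.js documentation; the code only assumes that each hand exposes `palmPosition` as an `[x, y, z]` array in millimeters.

```javascript
"use strict";

// Assumed usable hover range above the controller, in millimeters.
// These bounds are illustrative placeholders; tune them for your setup.
var MIN_HEIGHT_MM = 100;
var MAX_HEIGHT_MM = 400;

// Map a palm height (mm) to an 8-bit PWM brightness (0-255),
// clamping heights that fall outside the assumed range.
function heightToBrightness(heightMm) {
  var clamped = Math.min(Math.max(heightMm, MIN_HEIGHT_MM), MAX_HEIGHT_MM);
  var fraction = (clamped - MIN_HEIGHT_MM) / (MAX_HEIGHT_MM - MIN_HEIGHT_MM);
  return Math.round(fraction * 255);
}

// Pick the brightness for a frame: 0 when no hand is visible,
// otherwise based on the height (y component) of the first hand.
function brightnessForFrame(frame) {
  if (!frame.hands || frame.hands.length === 0) {
    return 0;
  }
  return heightToBrightness(frame.hands[0].palmPosition[1]);
}

// A hand hovering halfway up the assumed range
console.log(brightnessForFrame({ hands: [{ palmPosition: [0, 250, 0] }] }));
```

Inside the `frame` handler, you could pass the result to the LED instead of calling `turnOn()` and `turnOff()`. That would likely require moving the LED to a PWM-capable pin and checking which brightness method your version of the Cylon.js LED driver provides.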

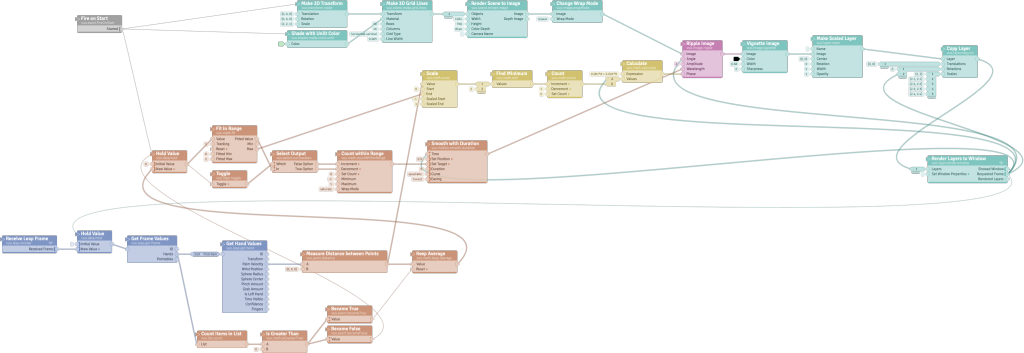

Visual Scripting with Vuo

Vuo is a visual scripting platform that features the ability to connect a wide variety of inputs and outputs. The latest version includes support for Serial I/O, which opens up access to a range of devices, including Arduino boards. Read more about Vuo in our featured blog post or learn more from their feature request thread.

Open Source Examples

Arduino + 3D Printer

This Processing sketch from Andrew Maxwell-Parish lets you control a MakerBot 3D printer with hand movements. It works by gathering the XYZ position of your fingers from the Leap Motion API, converting it into the Cartesian coordinates the 3D printer expects, packaging it into G-code format, and sending it to the printer via an Arduino-based controller.
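To make that pipeline concrete, here is a minimal JavaScript sketch of the middle two steps: rescaling a tracked position into printer coordinates and formatting it as a G-code linear move (`G1`). It is not the original Processing code. The tracking range, build volume, axis mapping, and function names are all assumptions chosen for illustration.

```javascript
"use strict";

// Assumed tracking box (mm, Leap coordinates: x right, y up, z toward the user)
// and an assumed printer build volume (mm). Both are placeholders.
var LEAP_RANGE = { x: [-150, 150], y: [100, 400], z: [-150, 150] };
var BUILD_VOLUME = { x: 200, y: 200, z: 150 };

// Linearly rescale value from [inMin, inMax] to [0, outMax], clamped.
function rescale(value, inMin, inMax, outMax) {
  var fraction = (value - inMin) / (inMax - inMin);
  return Math.min(Math.max(fraction, 0), 1) * outMax;
}

// Convert a Leap position [x, y, z] to printer coordinates.
// The Leap's vertical axis is y, while the printer's vertical axis is z,
// so the two are swapped here.
function leapToPrinter(position) {
  return {
    x: rescale(position[0], LEAP_RANGE.x[0], LEAP_RANGE.x[1], BUILD_VOLUME.x),
    y: rescale(position[2], LEAP_RANGE.z[0], LEAP_RANGE.z[1], BUILD_VOLUME.y),
    z: rescale(position[1], LEAP_RANGE.y[0], LEAP_RANGE.y[1], BUILD_VOLUME.z)
  };
}

// Format a linear-move command (G1) with a feed rate in mm/min.
function toGcode(point, feedRate) {
  return "G1 X" + point.x.toFixed(2) +
         " Y" + point.y.toFixed(2) +
         " Z" + point.z.toFixed(2) +
         " F" + feedRate;
}

console.log(toGcode(leapToPrinter([0, 250, 0]), 1500));
// prints "G1 X100.00 Y100.00 Z75.00 F1500"
```

Clamping to the build volume matters here: a hand that drifts outside the tracked box should never produce a move the printer cannot physically make.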

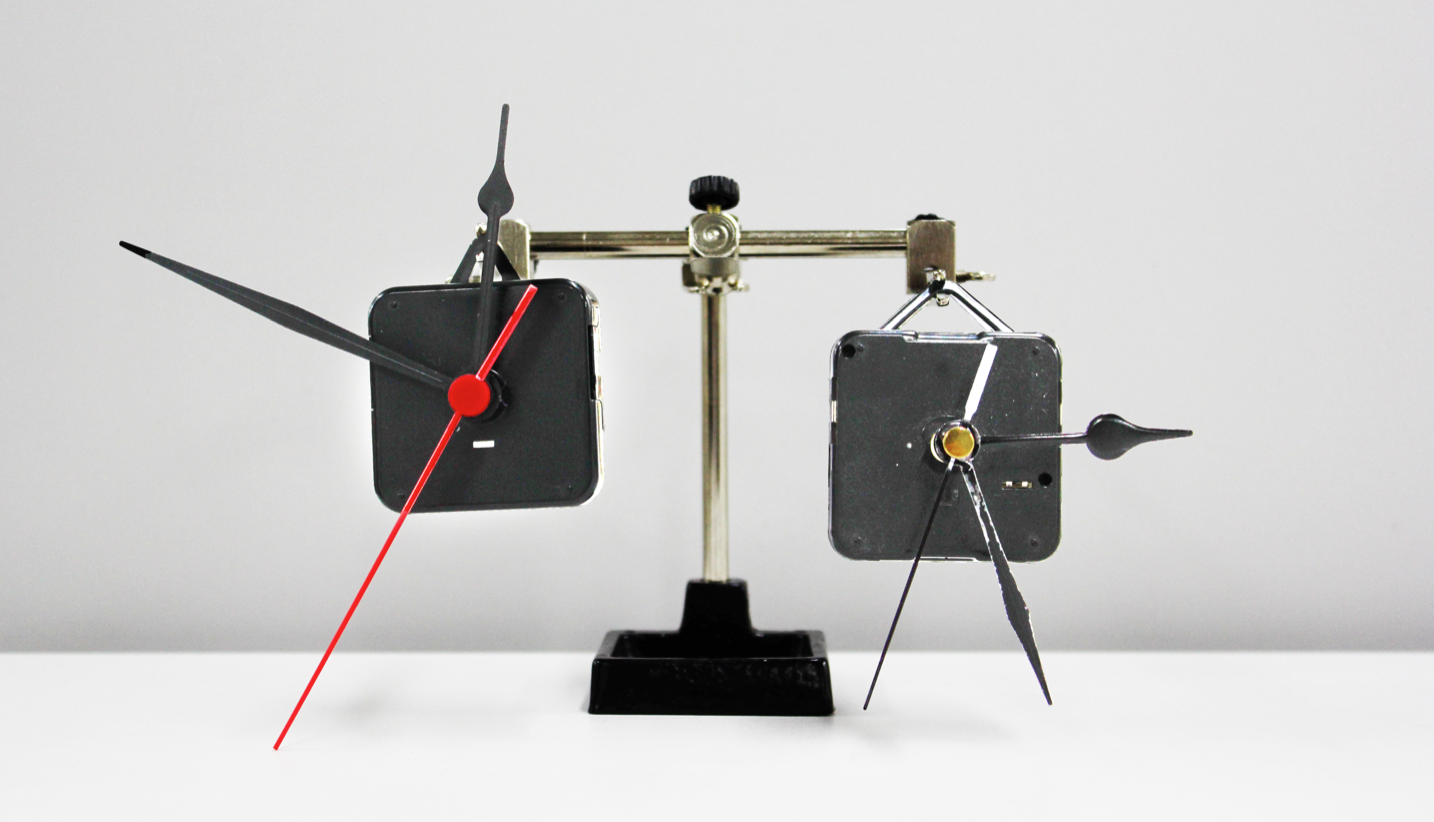

Arduino Motor Shield + Clock Motors

The Arduino Motor Shield is an additional kit that makes it easy for you to control motor direction and speed with your Arduino. (Check out this great Instructables tutorial to set it up.) Using the Motor Shield and a Processing script, interactive design student James Miller created BLAST: Time – a little sculpture with a clock that responds to people’s hand movements.
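Since a motor shield boils down to a direction signal plus a PWM speed, the computer-side logic can be quite small. The sketch below is one hypothetical way to turn a horizontal hand offset into such a command. It is not the BLAST: Time code; the dead zone, the range, the function names, and the `F,255` text format are all assumptions, and the Arduino sketch on the other end would need to parse whatever format you choose.

```javascript
"use strict";

// Hand offsets smaller than the dead zone (mm) stop the motor.
// Both values are assumed for illustration.
var DEAD_ZONE_MM = 20;
var MAX_OFFSET_MM = 150;

// Turn a horizontal hand offset (mm, negative = left) into a motor command:
// a direction flag plus an 8-bit PWM duty cycle.
function offsetToMotorCommand(offsetMm) {
  var magnitude = Math.abs(offsetMm);
  if (magnitude < DEAD_ZONE_MM) {
    return { forward: true, speed: 0 };
  }
  var clamped = Math.min(magnitude, MAX_OFFSET_MM);
  var fraction = (clamped - DEAD_ZONE_MM) / (MAX_OFFSET_MM - DEAD_ZONE_MM);
  return { forward: offsetMm > 0, speed: Math.round(fraction * 255) };
}

// Encode the command as a short text line, e.g. "F,255\n".
// This wire format is hypothetical, not part of any library.
function encodeCommand(command) {
  return (command.forward ? "F" : "R") + "," + command.speed + "\n";
}

// A hand far to the right: full speed forward
console.log(JSON.stringify(encodeCommand(offsetToMotorCommand(150))));
```

The dead zone keeps the motor from twitching when your hand hovers near the center, which tends to matter more in practice than the exact speed curve.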

Robotic Arm

Built in Python, this award-winning hack from last year’s LA Hacks featured a robotic arm with five different motors. Leap Motion data was sent to the arm controller over a Bluetooth connection, and the project earned the team a place in the competition finals.

Hacking a Radio Drone

When it comes to DIY robotics, taking the sneaky route is often half the fun. Using an Arduino-to-radio interface, another LA Hacks team was able to hack a drone’s radio signal, taking control from analog to digital. According to hardware hacker Casey Spencer, “remote control through the Internet, computer-aided flight, or even – with a little more hardware – complete autonomy is now possible for the majority of consumer drones already out there.”

Are you using Leap Motion interaction to help trigger the robo-apocalypse? Let us know in the comments, or share your project on the community forums!

The post How to Integrate Leap Motion with Arduino & Raspberry Pi appeared first on Leap Motion Blog.