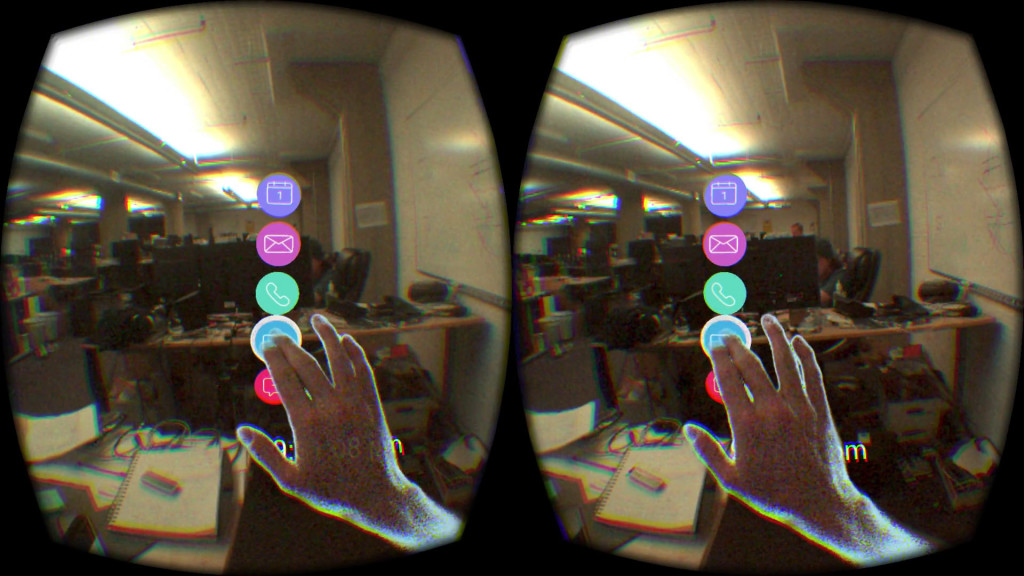

Early last month, Leap Motion kicked off our internal hackathon with a round of pitch sessions, where everyone bounces crazy ideas off each other to see which ones stick. One of our tracking engineers suggested using our prototype Dragonfly module to augment a physical display with virtual widgets. Our team of five ran with this concept to create AR Screen.

You’ve probably heard the rest of the story. Our team’s video got shared on /r/oculus and led to a feature on Wired. While the Wired story focuses a lot on the experience side of things – the power of spatial thinking and offices of the future – it was light on the technical details. Since we’ve heard from a lot of VR developers interested in the project, I thought I’d do a deep dive here on the blog.

Disclaimer: Lots of ugly hackathon code ahead!

Core Concepts and Resources

At Leap Motion, we spend a lot of time experimenting with new ways of interacting with technology, and we often run into the same problem. Flat digital interfaces were not designed for hands.

With this in mind, we designed AR Screen so that much of the input was still driven by the keyboard and mouse. This kind of approach is one way that we imagine transitioning from classic 2D interfaces to truly 3D operating systems. By stretching and augmenting the traditional interface, a screen in the real world can be more than a mere window into the digital – it can be a centerpiece of an experience that expands far beyond the borders of the screen.

AR Screen wasn’t built from scratch: we reused existing code from Shortcuts and a couple of other demos, mashed it together with several libraries, and added Dragonfly support. At a high level, the architecture had a few different modules running asynchronously.

Libraries used: SFML, LeapGL, Autowiring, Eigen, FreeImage, Glew, Leap Motion SDK, Oculus SDK

Windows

One of the features that helped make the experience feel more alive was capturing window textures in real time.

The main workhorse of the window capture is a background thread that performs round-robin texture capture. Foreground windows are captured every iteration, and background windows are updated less often.

    void WindowManager::Run() {
      // Takes snapshots in a background thread; GPU uploads are done in the main render thread.
      while (!ShouldStop()) {
        int minZ, maxZ;
        GetZRange(minZ, maxZ);
        {
          // Take the lock before checking for emptiness, so the window list cannot
          // become empty between the check and the modulo below.
          std::unique_lock<std::mutex> lock(m_WindowsMutex);
          if (!m_Windows.empty()) {
            m_RoundRobinCounter = (m_RoundRobinCounter+1) % m_Windows.size();
            int curCounter = 0;
            for (const auto& it : m_Windows) {
              const int z = it.second->m_Window.GetZOrder();
              // Refresh the round-robin pick, the frontmost window, and any forced updates.
              const bool updateTexture = (curCounter == m_RoundRobinCounter
                || z == maxZ || it.second->m_ForceUpdate);
              if (updateTexture) {
                it.second->m_Window.TakeSnapshot();
                it.second->m_HaveSnapshot = true;
              }
              curCounter++;
            }
          }
        }
        std::this_thread::sleep_for(std::chrono::milliseconds(10));
      }
    }

The process of displaying the window textures is broken up into two parts. The first part is the TakeSnapshot function called in the code above. This function uses the Windows API to get the device context of a particular window, and then copies the window contents into a separate buffer.

    int OSWindowWin::TakeSnapshot(void) {
      HDC hdc = GetWindowDC(hwnd);
      auto cleanhdc = MakeAtExit([&] {ReleaseDC(hwnd, hdc); });
      SIZE bmSz;
      {
        RECT rc;
        GetWindowRect(hwnd, &rc);
        bmSz.cx = rc.right - rc.left;
        bmSz.cy = rc.bottom - rc.top;
      }
      if (!bmSz.cx || !bmSz.cy)
        // Cannot create a window texture, window is gone
        return m_counter;
      if(m_szBitmap.cx != bmSz.cx || m_szBitmap.cy != bmSz.cy) {
        BITMAPINFO bmi;
        auto& hdr = bmi.bmiHeader;
        hdr.biSize = sizeof(bmi.bmiHeader);
        hdr.biWidth = bmSz.cx;
        hdr.biHeight = -bmSz.cy;
        hdr.biPlanes = 1;
        hdr.biBitCount = 32;
        hdr.biCompression = BI_RGB;
        hdr.biSizeImage = 0;
        hdr.biXPelsPerMeter = 0;
        hdr.biYPelsPerMeter = 0;
        hdr.biClrUsed = 0;
        hdr.biClrImportant = 0;
        // Create a DC to be used for rendering
        m_hBmpDC.reset(CreateCompatibleDC(hdc));
        // Create the bitmap where the window will be rendered:
        m_hBmp.reset(CreateDIBSection(hdc, &bmi, DIB_RGB_COLORS, &m_phBitmapBits, nullptr, 0));
        // Attach our bitmap where it needs to be:
        SelectObject(m_hBmpDC.get(), m_hBmp.get());
        // Update the size to reflect the new bitmap dimensions
        m_szBitmap = bmSz;
      }
      while (m_lock.test_and_set(std::memory_order_acquire)) // acquire lock
        ; // spin
      // Bit blit time to get at those delicious pixels
      BitBlt(m_hBmpDC.get(), 0, 0, m_szBitmap.cx, m_szBitmap.cy, hdc, 0, 0, SRCCOPY);
      m_lock.clear(std::memory_order_release); // release lock
      return ++m_counter;
    }

The second part uploads the captured window textures to the graphics card, to be drawn by the OpenGL renderer. This process occurs in the main render thread, so access to the capture texture buffers is protected using locks. Buffer allocations on the GPU are done lazily, so if the incoming texture is the same size as the previous frame, it reuses the existing memory.

    std::shared_ptr<ImagePrimitive> OSWindowWin::GetWindowTexture(std::shared_ptr<ImagePrimitive> img) {
      if (!m_phBitmapBits) {
        return img;
      }
      // See if the texture underlying image was resized or not. If so, we need to create a new texture
      std::shared_ptr<Leap::GL::Texture2> texture = img->Texture();
      if (texture) {
        const auto& params = texture->Params();
        if (params.Height() != m_szBitmap.cy || params.Width() != m_szBitmap.cx) {
          texture.reset();
        }
      }
      Leap::GL::Texture2PixelData pixelData{ GL_BGRA, GL_UNSIGNED_BYTE, m_phBitmapBits, static_cast<size_t>(m_szBitmap.cx * m_szBitmap.cy * 4) };
      if (texture) {
        while (m_lock.test_and_set(std::memory_order_acquire)) // acquire lock
          ; // spin
        texture->TexSubImage(pixelData);
        m_lock.clear(std::memory_order_release); // release lock
      } else {
        Leap::GL::Texture2Params params{ static_cast<GLsizei>(m_szBitmap.cx), static_cast<GLsizei>(m_szBitmap.cy) };
        params.SetTarget(GL_TEXTURE_2D);
        params.SetInternalFormat(GL_RGB8);
        params.SetTexParameteri(GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);
        params.SetTexParameteri(GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);
        params.SetTexParameteri(GL_TEXTURE_MAG_FILTER, GL_LINEAR);
        params.SetTexParameteri(GL_TEXTURE_MIN_FILTER, GL_LINEAR_MIPMAP_LINEAR);
        while (m_lock.test_and_set(std::memory_order_acquire)) // acquire lock
          ; // spin
        texture = std::make_shared<Leap::GL::Texture2>(params, pixelData);
        m_lock.clear(std::memory_order_release); // release lock
        img->SetTexture(texture);
        img->SetScaleBasedOnTextureSize();
      }
      texture->Bind();
      glGenerateMipmap(GL_TEXTURE_2D);
      texture->Unbind();
      return img;
    }
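Both the capture thread and the render thread repeat the same acquire/spin/release sequence on `m_lock` by hand. One way to make that harder to get wrong is an RAII guard, so the flag is always cleared on scope exit. The sketch below is not from the AR Screen source: `SpinGuard`, `PixelBuffer`, and `FillBuffer` are hypothetical names used only for illustration, and the only real API involved is `std::atomic_flag`.

```cpp
#include <atomic>
#include <cassert>

// Hypothetical RAII wrapper around the spin pattern used in the listings above.
class SpinGuard {
public:
  explicit SpinGuard(std::atomic_flag& flag) : m_flag(flag) {
    while (m_flag.test_and_set(std::memory_order_acquire)) {
      // spin until the other thread clears the flag
    }
  }
  ~SpinGuard() { m_flag.clear(std::memory_order_release); }

  SpinGuard(const SpinGuard&) = delete;
  SpinGuard& operator=(const SpinGuard&) = delete;

private:
  std::atomic_flag& m_flag;
};

// Stand-in for a shared capture buffer (illustrative only).
struct PixelBuffer {
  std::atomic_flag lock = ATOMIC_FLAG_INIT;
  int pixels[4] = {0, 0, 0, 0};
};

// Writes to the buffer while holding the flag; the guard releases it on return.
inline void FillBuffer(PixelBuffer& buf, int value) {
  SpinGuard guard(buf.lock);
  for (int& p : buf.pixels) {
    p = value;
  }
}
```

With a guard like this, an early return between acquire and release can no longer leave the flag set.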

When you manipulate the virtual windows with your hands, the underlying operating system also receives the resulting move/resize events. To make this work, we map the 3D interaction coordinates into these 2D Windows API calls:

    void OSWindowWin::SetPositionAndSize(const OSPoint& pos, const OSSize& size) {
      SetWindowPos(hwnd, HWND_TOP, pos.x, pos.y, size.width, size.height, 0);
    }

    void OSWindowWin::GetPositionAndSize(OSPoint& pos, OSSize& size) {
      RECT rect;
      GetWindowRect(hwnd, &rect);
      pos.x = (float)rect.left;
      pos.y = (float)rect.top;
      size.width = (float)(rect.right - rect.left);
      size.height = (float)(rect.bottom - rect.top);
    }
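The mapping from a 3D interaction point to desktop pixels isn't shown in the post. As a rough sketch of how such a mapping could work, assume the virtual desktop lies on a plane described by the world position of pixel (0, 0), two unit axes, and a pixels-per-unit scale. Everything here (`Vec3`, `ScreenPlane`, `WorldToDesktop`) is a hypothetical stand-in, not the AR Screen implementation, which uses Eigen and its own window transform.

```cpp
#include <cassert>
#include <cmath>

struct Vec3 { double x, y, z; };

inline Vec3 Sub(const Vec3& a, const Vec3& b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
inline double Dot(const Vec3& a, const Vec3& b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Hypothetical description of the desktop plane in world space.
struct ScreenPlane {
  Vec3 topLeft;          // world position of desktop pixel (0, 0)
  Vec3 right;            // unit vector along the desktop's +x axis
  Vec3 down;             // unit vector along the desktop's +y axis (y grows downward)
  double pixelsPerUnit;  // desktop pixels per world unit
};

struct PixelPoint { int x, y; };

// Projects a world-space point onto the plane and converts it to desktop pixels.
inline PixelPoint WorldToDesktop(const ScreenPlane& plane, const Vec3& p) {
  const Vec3 rel = Sub(p, plane.topLeft);
  // The dot products discard the component perpendicular to the plane.
  const double px = Dot(rel, plane.right) * plane.pixelsPerUnit;
  const double py = Dot(rel, plane.down) * plane.pixelsPerUnit;
  return { static_cast<int>(std::lround(px)), static_cast<int>(std::lround(py)) };
}
```

The resulting pixel coordinates are the kind of values that would be passed on to a call such as `SetPositionAndSize`.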

The window capture and layout could definitely use some work. For instance, we could capture the windows at a higher DPI to make small text easier to read. To make a larger workspace possible, it would be more practical to rotate the windows toward the center instead of all facing the same direction. And finally, it’s worth mentioning that the capture code above won’t work with certain types of apps (like fullscreen games) and is best suited for individual OS windows.
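To illustrate the idea of rotating windows toward the center, here is a minimal sketch that computes a yaw angle for each window so that it faces the viewer. The coordinate conventions (viewer at the origin looking down -z, windows facing +z by default) and the function names are assumptions made for this example; this was not part of AR Screen.

```cpp
#include <cassert>
#include <cmath>

// Yaw (radians, about the vertical axis) that turns a window's normal from its
// default direction (0, 0, 1) toward a viewer at the origin. x and z are the
// window center in viewer-centered coordinates. Assumed conventions only.
inline double YawTowardViewer(double x, double z) {
  return std::atan2(-x, -z);
}

// Horizontal components of the window normal after applying the yaw.
inline void NormalFromYaw(double yaw, double& nx, double& nz) {
  nx = std::sin(yaw);
  nz = std::cos(yaw);
}
```

A window straight ahead keeps a yaw of zero, while windows further to the side are turned progressively more toward the viewer.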

Leap Motion

The project used the public Leap Motion SDK with standard tracking to receive hand tracking and video passthrough data. The Dragonfly module allowed us to add higher-resolution images with full color, which made the overall experience more immersive.

The main interaction code handles interactions with the newsfeed and windows, and detects the gestures to activate and deactivate the virtual desktop.

    void Scene::leapInteract(float deltaTime) {
      AutowiredFast<WindowManager> manager;
      if (manager) {
        for (auto& it : manager->m_Windows) {
          FakeWindow& wind = *it.second;
          wind.Interact(*(manager->m_WindowTransform), m_TrackedHands, deltaTime);
        }
      }

      double scrollVel = 0;
      for (const auto& it : m_TrackedHands) {
        const HandInfo& trackedHand = *it.second;
        HandInfo::IntersectionVector intersections = trackedHand.IntersectRectangle(*m_NewsFeedRect);
        for (const auto& intersection : intersections) {
          scrollVel += 0.25 * intersection.velocity.y();
        }
      }
      if (std::fabs(scrollVel) > 0.01) {
        m_ScrollVel.SetSmoothStrength(0.1f);
      } else {
        m_ScrollVel.SetSmoothStrength(0.8f);
      }
      m_ScrollVel.SetGoal(scrollVel);
      m_ScrollVel.Update(deltaTime);
      m_FeedScroll += deltaTime * m_ScrollVel.Value();

      if (manager) {
        // detect activation gesture
        int numActivatingHands = 0;
        int numDeactivatingHands = 0;
        if (m_TrackedHands.size() >= 2) {
          for (const auto& it : m_TrackedHands) {
            const HandInfo& trackedHand = *it.second;
            const Leap::Hand& hand = trackedHand.GetLastSeenHand();
            const bool confident = trackedHand.GetConfidence() > 0.8;
            const bool facingOutward = hand.palmNormal().y > 0.8f;
            const bool fast = hand.palmVelocity().magnitude() > 600;
            const float yNorm = hand.palmVelocity().normalized().y;
            const bool pulling = yNorm < -0.8f;
            const bool pushing = yNorm > 0.8f;
            if (confident && facingOutward && fast) {
              if (pulling) {
                numActivatingHands++;
              } else if (pushing) {
                numDeactivatingHands++;
              }
            }
          }
        }

        const double gestureTime = 0.15;
        if (numActivatingHands == 2) {
          if (!m_ActivationGesture) {
            m_GestureStart = Globals::curFrameTime;
            m_ActivationGesture = true;
          } else {
            const double timeDiff = (Globals::curFrameTime - m_GestureStart).count();
            if (timeDiff >= gestureTime && !manager->m_Active) {
              manager->Activate();
              m_ActivationGesture = false;
            }
          }
        } else {
          m_ActivationGesture = false;
        }

        if (numDeactivatingHands == 2) {
          if (!m_DeactivationGesture) {
            m_GestureStart = Globals::curFrameTime;
            m_DeactivationGesture = true;
          } else {
            const double timeDiff = (Globals::curFrameTime - m_GestureStart).count();
            if (timeDiff >= gestureTime && manager->m_Active) {
              manager->Deactivate();
              m_DeactivationGesture = false;
            }
          }
        } else {
          m_DeactivationGesture = false;
        }
      }
    }
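The activation and deactivation branches share the same debouncing idea: a gesture only fires once both hands have satisfied the condition continuously for `gestureTime` seconds. Pulled out on its own, that logic looks roughly like the sketch below. `HoldDetector` is a hypothetical helper written for this explanation, and it takes time as plain seconds rather than the `Globals::curFrameTime` type used in the original.

```cpp
#include <cassert>

// Hypothetical helper: reports when a condition has held continuously for
// holdSeconds. Mirrors the start-timer / check-elapsed / reset pattern above.
class HoldDetector {
public:
  explicit HoldDetector(double holdSeconds) : m_hold(holdSeconds) {}

  // Call once per frame. Returns true on the frame where the hold completes.
  bool Update(bool conditionMet, double nowSeconds) {
    if (!conditionMet) {
      m_active = false;        // any interruption resets the timer
      return false;
    }
    if (!m_active) {
      m_active = true;         // first frame of the gesture: start timing
      m_start = nowSeconds;
      return false;
    }
    if (nowSeconds - m_start >= m_hold) {
      m_active = false;        // fire once, then wait for a fresh gesture
      return true;
    }
    return false;
  }

private:
  double m_hold;
  double m_start = 0.0;
  bool m_active = false;
};
```

In the original code the same check is additionally gated on `manager->m_Active`, so an already active desktop is not activated again.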

When the gestures are triggered, each window moves and fades according to a smoothing filter. The windows move in Z order, such that frontmost windows are first to activate but last to deactivate.

    void WindowManager::Activate() {
      int minZ, maxZ;
      GetZRange(minZ, maxZ);
      // Guard against a zero Z range (e.g. a single window), which would otherwise yield 0/0 = NaN.
      const float zRange = static_cast<float>(maxZ - minZ);
      for (auto& it : m_Windows) {
        const int z = it.second->m_Window.GetZOrder();
        const float zRatio = zRange > 0.0f ? static_cast<float>(z - minZ) / zRange : 0.0f;
        const float smooth = baseSmooth + smoothVariation * (1.0f - zRatio);
        it.second->m_PositionOffset.SetSmoothStrength(smooth);
        it.second->m_Opacity.SetSmoothStrength(smooth);
        it.second->m_Opacity.SetGoal(1.0f);
        it.second->m_PositionOffset.SetGoal(Eigen::Vector3d::Zero());
      }
      m_Active = true;
    }

    void WindowManager::Deactivate() {
      int minZ, maxZ;
      GetZRange(minZ, maxZ);
      // Same zero-range guard as in Activate().
      const float zRange = static_cast<float>(maxZ - minZ);
      for (auto& it : m_Windows) {
        const int z = it.second->m_Window.GetZOrder();
        const float zRatio = zRange > 0.0f ? static_cast<float>(z - minZ) / zRange : 0.0f;
        const float smooth = baseSmooth + smoothVariation * zRatio;
        it.second->m_PositionOffset.SetSmoothStrength(smooth);
        it.second->m_Opacity.SetSmoothStrength(smooth);
        it.second->m_Opacity.SetGoal(0.0f);
        it.second->m_PositionOffset.SetGoal(Eigen::Vector3d(0, 0, -1000));
      }
      m_Active = false;
    }

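The smoothing filter is an iterated exponential filter: the output of one exponential stage feeds the next. Compared to a single exponential filter, start and stop transitions are much smoother, because the cascade spreads the data weights into a hump-shaped, Poisson-like distribution instead of one that always favors the newest sample.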

    // This is a simple smoothing utility class that will perform Poisson smoothing.
    // The class is templated and can be used with double, float, Eigen::Vector3, or anything
    // that overloads addition and scalar multiplication.
    // When NUM_ITERATIONS is 1, the functionality is the same as exponential smoothing.
    // WARNING - Due to some vagaries of Poisson smoothing & floating point math,
    // this is not guaranteed to ever actually reach the goal value, Zeno's Paradox style
    template <class T, int _NUM_ITERATIONS = 5>
    class Smoothed {
    public:
      static const int NUM_ITERATIONS = _NUM_ITERATIONS;

      // No default constructor so that we can avoid nasty uninitialized memory problems
      Smoothed(const T& initialValue, float smoothStrength = 0.8f, float targetFramerate = 100.0f) :
        m_TargetFramerate(targetFramerate), m_SmoothStrength(smoothStrength) {
        SetImmediate(initialValue);
      }

      // const getters
      operator T() const { return Value(); }
      const T& Value() const { return m_Values[NUM_ITERATIONS-1]; }
      const T& Goal() const { return m_Goal; }

      // setters to control animation
      void SetGoal(const T& goal) { m_Goal = goal; }

      // sets the goal and value to the same number
      void SetImmediate(const T& value) {
        m_Goal = value;
        for (int i = 0; i<NUM_ITERATIONS; i++) {
          m_Values[i] = value;
        }
      }

      void SetSmoothStrength(float smooth) { m_SmoothStrength = smooth; }

      DEPRECATED_FUNC void SetInitialValue(const T& value) {
        for (int i=0; i<NUM_ITERATIONS; i++) {
          m_Values[i] = value;
        }
      }

      // main update function, must be called every frame
      void Update(float deltaTime) {
        const float dtExponent = deltaTime * m_TargetFramerate;
        const float smooth = std::pow(m_SmoothStrength, dtExponent);
        assert(smooth >= 0.0f && smooth <= 1.0f);
        for (int i=0; i<NUM_ITERATIONS; i++) {
          const T& prev = i == 0 ? m_Goal : m_Values[i-1];
          m_Values[i] = smooth*m_Values[i] + (1.0f-smooth)*prev;
        }
      }

    private:
      T m_Values[NUM_ITERATIONS];
      T m_Goal;
      float m_TargetFramerate;
      float m_SmoothStrength;
    };
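Two properties of this filter are easy to check with a stripped-down version. Raising the smoothing strength to the power `deltaTime * targetFramerate` makes a single stage frame-rate independent for a fixed goal, and chaining stages makes the last stage start moving more gently than the first. The sketch below is a simplified, double-only rewrite for illustration; `ExpStep` and `CascadeStep` are hypothetical names, not part of the original class.

```cpp
#include <cassert>
#include <cmath>

// One exponential stage: keeps a fraction strength^(dt * targetFramerate)
// of the remaining distance between value and goal.
inline double ExpStep(double value, double goal, double strength,
                      double dt, double targetFramerate = 100.0) {
  const double smooth = std::pow(strength, dt * targetFramerate);
  return smooth * value + (1.0 - smooth) * goal;
}

// Cascade of N stages: stage 0 chases the goal, and stage i chases stage i-1,
// in the same order as the Update() loop above.
template <int N>
inline void CascadeStep(double (&values)[N], double goal, double strength, double dt) {
  for (int i = 0; i < N; i++) {
    const double prev = (i == 0) ? goal : values[i - 1];
    values[i] = ExpStep(values[i], prev, strength, dt);
  }
}
```

For a single stage, one 20 ms update and two 10 ms updates toward the same goal land on the same value, which is why the animation speed does not depend on the frame rate. The cascade as a whole is only approximately frame-rate independent.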

The last piece of Leap Motion-specific code retrieves the video passthrough textures. The same code path serves the peripheral and Dragonfly alike: it tells them apart by checking the image width and picks a grayscale or color format accordingly. Processing the distortion maps is the same for both.

    void ImagePassthrough::updateImage(int idx, const Leap::Image& image) {
      GLenum format = (image.width() == 640) ? GL_LUMINANCE : GL_RGBA;
      m_Color = (format == GL_RGBA);
      std::shared_ptr<Leap::GL::Texture2>& tex = m_Textures[idx];
      const unsigned char* data = image.data();
      const int width = image.width();
      const int height = image.height();
      const int bytesPerPixel = image.bytesPerPixel();
      const size_t numBytes = static_cast<size_t>(width * height * bytesPerPixel);
      Leap::GL::Texture2PixelData pixelData(format, GL_UNSIGNED_BYTE, data, numBytes);
      if (!tex || numBytes != m_ImageBytes[idx]) {
        Leap::GL::Texture2Params params(static_cast<GLsizei>(width), static_cast<GLsizei>(height));
        params.SetTarget(GL_TEXTURE_2D);
        params.SetInternalFormat(format);
        params.SetTexParameteri(GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);
        params.SetTexParameteri(GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);
        params.SetTexParameteri(GL_TEXTURE_MAG_FILTER, GL_LINEAR);
        params.SetTexParameteri(GL_TEXTURE_MIN_FILTER, GL_LINEAR);
        tex = std::shared_ptr<Leap::GL::Texture2>(new Leap::GL::Texture2(params, pixelData));
        m_ImageBytes[idx] = numBytes;
      } else {
        tex->TexSubImage(pixelData);
      }
    }

    void ImagePassthrough::updateDistortion(int idx, const Leap::Image& image) {
      std::shared_ptr<Leap::GL::Texture2>& distortion = m_Distortion[idx];
      const float* data = image.distortion();
      const int width = image.distortionWidth()/2;
      const int height = image.distortionHeight();
      const int bytesPerPixel = 2 * sizeof(float); // XY per pixel
      const size_t numBytes = static_cast<size_t>(width * height * bytesPerPixel);
      Leap::GL::Texture2PixelData pixelData(GL_RG, GL_FLOAT, data, numBytes);
      if (!distortion || numBytes != m_DistortionBytes[idx]) {
        Leap::GL::Texture2Params params(static_cast<GLsizei>(width), static_cast<GLsizei>(height));
        params.SetTarget(GL_TEXTURE_2D);
        params.SetInternalFormat(GL_RG32F);
        params.SetTexParameteri(GL_TEXTURE_WRAP_S, GL_CLAMP_TO_EDGE);
        params.SetTexParameteri(GL_TEXTURE_WRAP_T, GL_CLAMP_TO_EDGE);
        params.SetTexParameteri(GL_TEXTURE_MAG_FILTER, GL_LINEAR);
        params.SetTexParameteri(GL_TEXTURE_MIN_FILTER, GL_LINEAR);
        distortion = std::shared_ptr<Leap::GL::Texture2>(new Leap::GL::Texture2(params, pixelData));
        m_DistortionBytes[idx] = numBytes;
      } else {
        distortion->TexSubImage(pixelData);
      }
    }

Bringing in real-life hands with the video passthrough also helps make for a better experience with augmented reality, compared to drawing a fully rigged/skinned hand. That’s the thinking behind our Image Hands assets, which we launched for Unity back in May. Before that, however, we did some early experiments with this approach in C++. As you might guess, the Image Hands implementation in AR Screen isn’t nearly as advanced as the one in our Unity asset.

AR Screen was a fun experiment, and we’re really humbled that something we created in a couple of days has inspired people. As VR continues to unfold into the mainstream, we can’t wait to see what kinds of interfaces and experiences are on the horizon.

AR Screen was created by Kevin Chin, Kyle Hay, Jon Marsden, Hua Yang, and me.

The post Under the Hood: The Leap Motion Hackathon’s Augmented Reality Workspace appeared first on Leap Motion Blog.